Miguel Aguilera complex systems, neuroscience and cognition

Scope:

My research uses methods from several branches of complex systems research and related areas (statistical mechanics, information theory, machine learning and nonlinear dynamics) to study the ongoing closed-loop interaction of intelligent agents with their environments. I use methods from nonequilibrium physics to study neural systems interacting with their environment as open, nonequilibrium systems, which often challenge mathematical and modelling methods that assume linearity or asymptotic equilibrium. My goal is to apply these methods to open problems and theoretical challenges in leading theories in neuroscience, as well as in broader research perspectives on life and mind.

Nonequilibrium neural computation

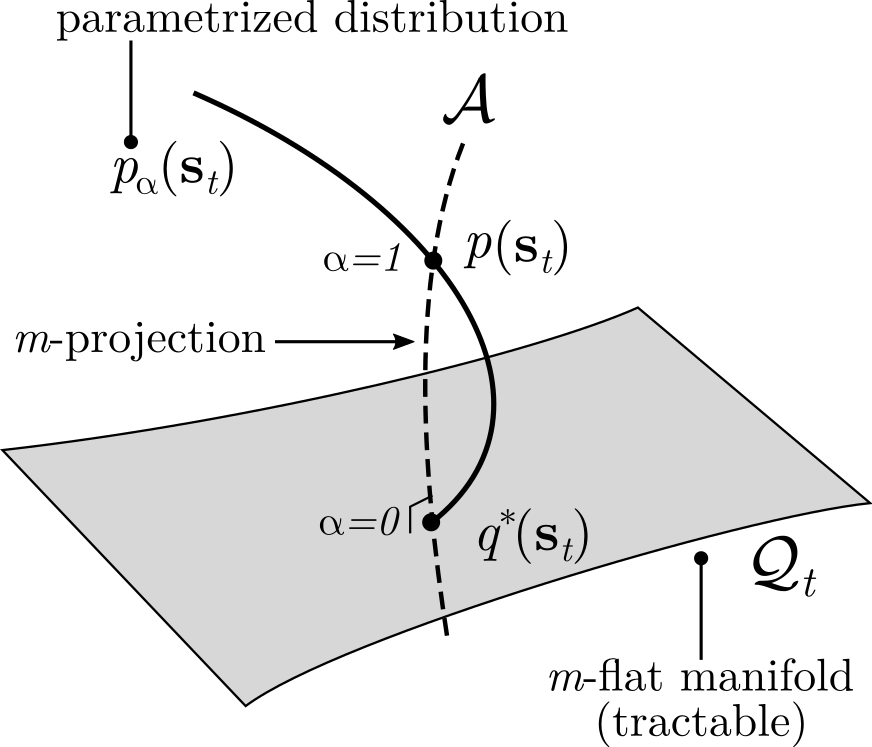

Neural systems operate far from equilibrium, changing with sensory streams and internal states through time-asymmetric, irreversible dynamics. Classical neuroscience theory often focuses on an equilibrium information paradigm (e.g. efficient coding), which is insufficient to describe how nervous systems organize and adapt to thrive out of equilibrium. With Hideaki Shimazaki and collaborators such as Amin Moosavi and Masanao Igarashi, we are developing methods for studying nonequilibrium neural systems, including mean-field methods based on information geometry that preserve the irreversible fluctuations of the system, and exact calculations describing the stochastic thermodynamics of disordered nonequilibrium systems.

- Aguilera, M, Igarashi, M & Shimazaki, H (2023). Nonequilibrium thermodynamics of the asymmetric Sherrington-Kirkpatrick model. Nature Communications 14, 3685.

- Aguilera, M, Moosavi, SA & Shimazaki, H (2021). A unifying framework for mean-field theories of asymmetric kinetic Ising systems. Nature Communications 12, 1197.
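To make the kind of model discussed above concrete, the following is a minimal sketch of a kinetic Ising network with asymmetric couplings, compared against the textbook naive mean-field approximation. It is an illustration only and not the information-geometric framework of the papers listed above: the network size, coupling scale, field scale and function names are all assumptions chosen for the example.

```python
import numpy as np

def simulate_kinetic_ising(J, H, steps, trials, rng):
    """Parallel Glauber dynamics for +/-1 spins.

    Each spin is set to +1 with probability (1 + tanh(h_i)) / 2,
    where h = H + J s(t-1). Returns the mean activity after `steps` updates.
    """
    n = len(H)
    s = np.ones((trials, n))  # every trial starts with all spins up
    for _ in range(steps):
        h = H + s @ J.T                      # effective fields, one row per trial
        p_up = 0.5 * (1.0 + np.tanh(h))
        s = np.where(rng.random((trials, n)) < p_up, 1.0, -1.0)
    return s.mean(axis=0)

def naive_mean_field(J, H, steps):
    """Naive mean-field update m(t) = tanh(H + J m(t-1)), same initial state."""
    m = np.ones(len(H))
    for _ in range(steps):
        m = np.tanh(H + J @ m)
    return m

rng = np.random.default_rng(0)
n = 50                                               # illustrative network size
J = rng.normal(0.0, 0.3 / np.sqrt(n), size=(n, n))   # J[i, j] != J[j, i]: asymmetric
H = rng.normal(0.0, 0.3, size=n)                     # illustrative external fields

m_sim = simulate_kinetic_ising(J, H, steps=20, trials=5000, rng=rng)
m_nmf = naive_mean_field(J, H, steps=20)
print("largest mean-field error:", np.abs(m_sim - m_nmf).max())
```

Because the couplings are asymmetric, the dynamics do not satisfy detailed balance, so the network settles into a nonequilibrium steady state. The naive approximation ignores fluctuations in the effective fields, and its error can be expected to grow as the couplings become stronger.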

Integrated Information Theory

Integrated Information Theory (IIT) is a popular mathematical framework for understanding integration in neural systems and its significance to processes like consciousness and awareness. However, the computational cost of its measures generally precludes applications beyond relatively small systems, and it is not yet well understood how integration scales with the size of a system, nor how a system maintains integration as it interacts with its environment. In collaboration with Ezequiel Di Paolo, we used mean-field approximations to show how measures of information integration scale when a neural network becomes very large, connecting them with theories of critical phase transitions.

- Aguilera, M & Di Paolo, EA (2019). Integrated information in the thermodynamic limit. Neural Networks 114, 136-146.

- Aguilera, M & Di Paolo, EA (2021). Critical integration in neural and cognitive systems: Beyond power-law scaling as the hallmark of soft assembly. Neuroscience & Biobehavioral Reviews 123.
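As a toy illustration of how a mean-field description exposes a critical phase transition in the large-size limit, the sketch below solves the self-consistency equation of the infinite-range (Curie-Weiss) Ising model, m = tanh(βJ m), and evaluates its zero-field susceptibility. It does not compute integrated information and is not the model used in the papers above; the function names and parameter values are assumptions made for the example.

```python
import math

def magnetization(beta_J, iters=5000, m0=0.5):
    """Fixed-point iteration of m = tanh(beta_J * m), started from m0 > 0."""
    m = m0
    for _ in range(iters):
        m = math.tanh(beta_J * m)
    return m

def susceptibility(beta_J):
    """Zero-field susceptibility divided by beta:
    (1 - m^2) / (1 - beta_J * (1 - m^2)), evaluated at the fixed point."""
    m = magnetization(beta_J)
    q = 1.0 - m * m
    return q / (1.0 - beta_J * q)

# Scan the coupling strength across the mean-field critical point beta_J = 1.
for beta_J in (0.5, 0.9, 0.99, 1.01, 1.1, 1.5):
    print(f"beta_J={beta_J:4.2f}  m={magnetization(beta_J):.4f}  "
          f"chi/beta={susceptibility(beta_J):.2f}")
```

Below βJ = 1 the only solution is m = 0, above it a nonzero magnetization appears, and the susceptibility grows sharply on both sides as the critical point is approached.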

Self-organized criticality and adaptation

Many biological and neural systems do not operate deep within one regime of activity or another. Instead, they are poised at critical points located at phase transitions in their parameter space, displaying non-trivial patterns like avalanches and large fluctuations. Here, we designed a learning rule that maintains invariances in the structure of a network corresponding to a universality class of critical systems. We implemented this rule in artificial robotic agents in two classical reinforcement learning scenarios and showed that, in both cases, the neural controller reaches a critical point that maximizes the complexity of the agent's behaviour, suggesting that adaptation to criticality can be used as a general adaptive mechanism.

- Aguilera, M & Bedia, MG (2018). Adaptation to criticality through organizational invariance in embodied agents. Scientific Reports 8, 7723.

- Aguilera, M, Bedia, MG & Barandiaran, XE (2016). Extended Neural Metastability in an Embodied Model of Sensorimotor Coupling. Frontiers in Systems Neuroscience 10, 76.

- Aguilera, M, Barandiaran, XE, Bedia, MG & Seron, F (2015). Self-Organized Criticality, Plasticity and Sensorimotor Coupling. Explorations with a Neurorobotic Model in a Behavioural Preference Task. PLoS ONE 10(2), e0117465.